Introduction to Linear Algebra

What Is Linear Algebra?

Linear algebra is a branch of mathematics that deals with vectors, matrices, vector spaces, linear mappings, and systems of linear equations. It analyzes how linear functions map vectors while preserving addition and scalar multiplication. It helps solve equations, represent geometric transformations, and model real-world systems.

It solves linear equations used in physics, engineering, and computer science. It provides the foundation for computational mathematics, data analysis, and artificial intelligence.

Linear algebra supports geometry, calculus, and computational algorithms. It simplifies complex equations by breaking them into linear components. It models multidimensional spaces and enables efficient data processing.

Historical Origins

Linear algebra has roots in ancient mathematics. Babylonian scribes solved small systems of simultaneous equations, and the Chinese text The Nine Chapters on the Mathematical Art solved them by manipulating arrays of coefficients, an early matrix-like method. In the 17th century, René Descartes introduced coordinate geometry, linking algebra with spatial representation.

In the 18th century, Gabriel Cramer developed determinant-based solutions for linear systems. Carl Friedrich Gauss later refined Gaussian elimination, a fundamental method for solving equations. In the 19th century, Hermann Grassmann introduced abstract vector spaces, while Arthur Cayley formalized matrix algebra.

Modern developments expanded linear algebra into higher dimensions and computational applications. It now plays a critical role in numerical analysis, theoretical physics, and artificial intelligence.

Basic Building Blocks of Linear Algebra

Linear algebra relies on vectors, scalars, and coordinate systems. It represents quantities, performs operations, and structures spatial data. It forms the foundation for physics, engineering, and data science.

1: Vectors

Vectors represent quantities with magnitude and direction. They describe motion, force, and physical phenomena. They appear as arrows, where length shows magnitude and the arrowhead indicates direction.

- Scalar and Vector Quantities – Scalars have only magnitude, such as temperature and mass. Vectors have both magnitude and direction, such as velocity and acceleration. Scalars represent single values, while vectors define movement in space.

- Vector Operations – Vector addition places two vectors head-to-tail. The resultant vector runs from the tail of the first to the head of the second. Vector subtraction adds the opposite vector, that is, the second vector with its direction reversed. Scaling multiplies a vector by a scalar, which changes its magnitude; a negative scalar also reverses its direction.

2: Scalars vs. Vectors

Scalars are single numbers without direction. Vectors carry both magnitude and direction and can have any number of components. Rotating the coordinate system leaves a scalar's value unchanged, while a vector's components change with orientation even though its magnitude does not.
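To make the rotation point concrete, here is a minimal sketch, assuming a 2D vector written as a pair of components (x, y). The helper names `rotate` and `magnitude` are illustrative, not taken from any library. Rotating the vector changes its components, but its magnitude (a scalar) stays the same.

```python
import math

def rotate(v, angle):
    """Rotate a 2D vector counterclockwise by `angle` radians."""
    x, y = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)

def magnitude(v):
    """Length of a 2D vector (a scalar)."""
    return math.hypot(*v)

v = (3.0, 4.0)
w = rotate(v, math.pi / 2)  # quarter turn: components become roughly (-4, 3)

print(v, w)                        # the components differ
print(magnitude(v), magnitude(w))  # the lengths agree up to rounding
```

Because the result is computed in floating point, the rotated components and lengths match the exact values only up to rounding error.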

3: Coordinate Systems

Coordinate systems define vector positions in space. They describe locations using numerical values. They support mathematical operations in geometry, physics, and engineering.

4: 2D and 3D Coordinate Representations

A 2D coordinate system uses x and y axes. It represents points on a flat plane. A 3D coordinate system includes an additional z-axis. It defines positions in three-dimensional space.

- Cartesian Coordinate System – The Cartesian system represents vectors with numerical components. In 2D, a vector appears as (x, y). In 3D, it extends to (x, y, z). It allows vector addition, subtraction, and scaling using algebraic formulas.

- Vector Representation in Cartesian Coordinates – Vector addition follows (x₁, y₁) + (x₂, y₂) = (x₁ + x₂, y₁ + y₂). Vector subtraction follows (x₁, y₁) − (x₂, y₂) = (x₁ − x₂, y₁ − y₂). Scaling follows c(x, y) = (cx, cy). Cartesian vectors simplify complex spatial calculations.
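The component formulas above translate directly into code. The following is a small sketch using plain Python tuples; the function names `add`, `subtract`, and `scale` are chosen here for illustration, and both vectors are assumed to have the same number of components.

```python
def add(u, v):
    """Component-wise sum; assumes u and v have the same length."""
    return tuple(a + b for a, b in zip(u, v))

def subtract(u, v):
    """Component-wise difference; assumes u and v have the same length."""
    return tuple(a - b for a, b in zip(u, v))

def scale(c, v):
    """Multiply every component of v by the scalar c."""
    return tuple(c * a for a in v)

u = (1, 2)
v = (3, -1)
print(add(u, v))       # (4, 1)
print(subtract(u, v))  # (-2, 3)
print(scale(2, u))     # (2, 4)
```

The same functions work unchanged for 3D vectors such as (x, y, z), since they operate component by component.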

Core Concepts of Linear Algebra

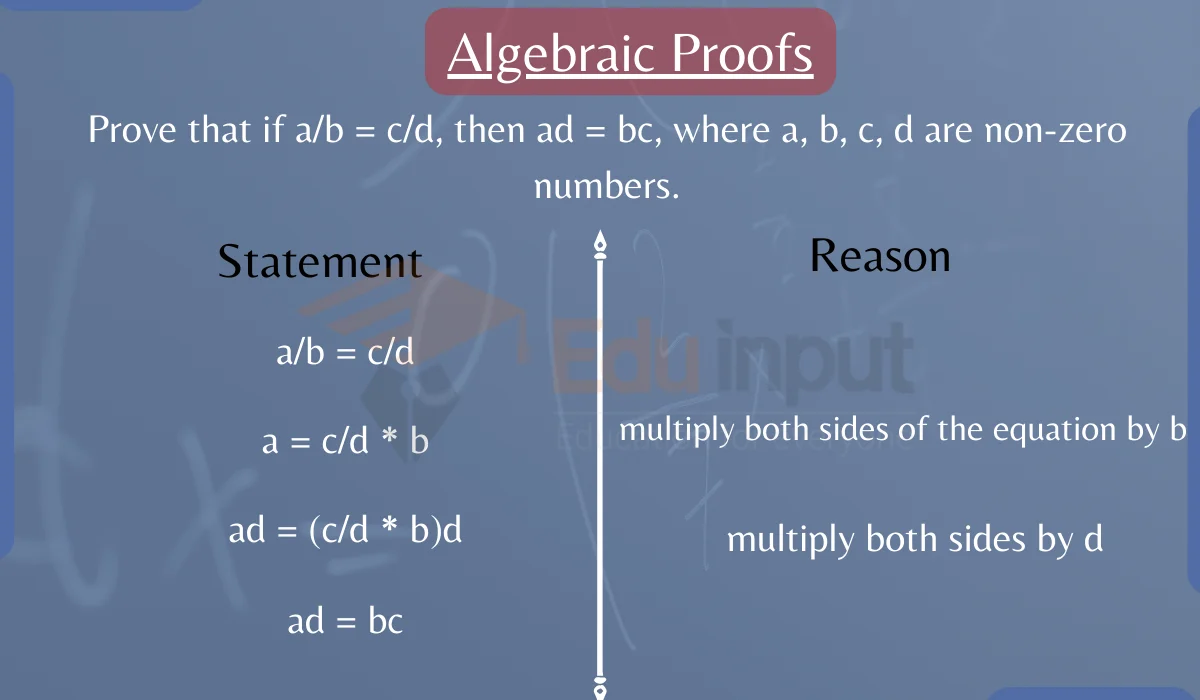

Linear algebra forms the foundation of vector spaces, transformations, and mathematical modeling. It helps solve systems of equations, analyze geometric structures, and optimize real-world problems. Key concepts include linear equations, linear combinations, and vector dependence.

Linear Equations

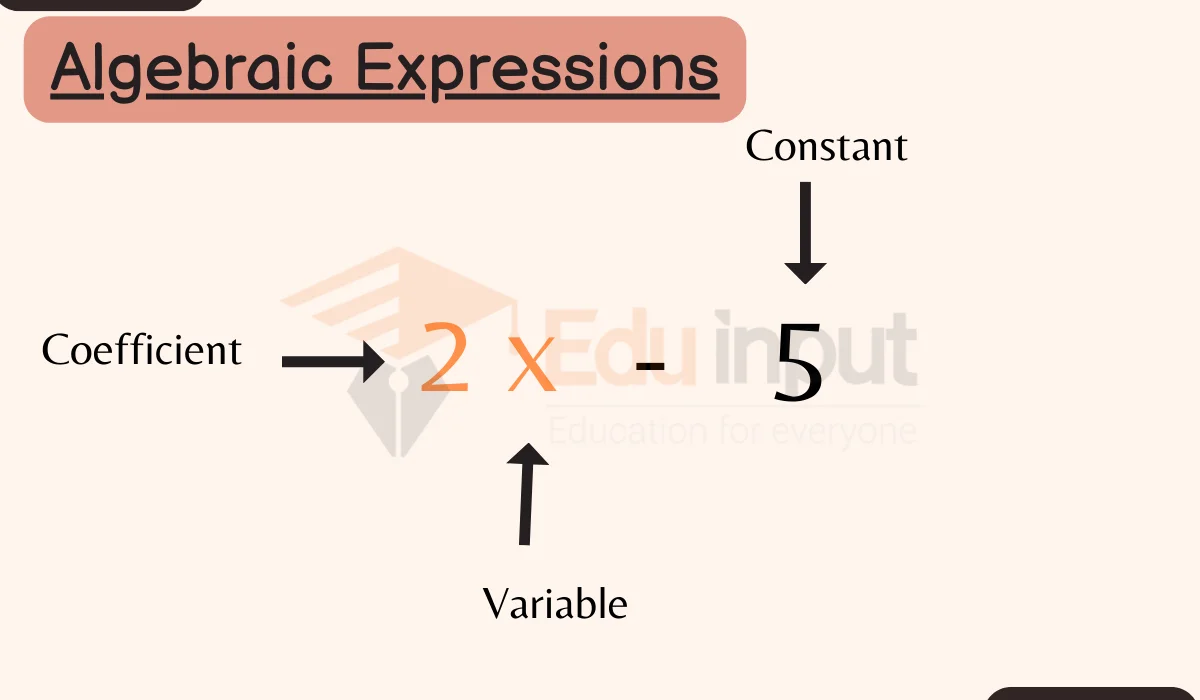

A linear equation represents a straight-line relationship between variables: each variable appears only to the first power. Such equations are used to model motion, forces, and financial relationships.

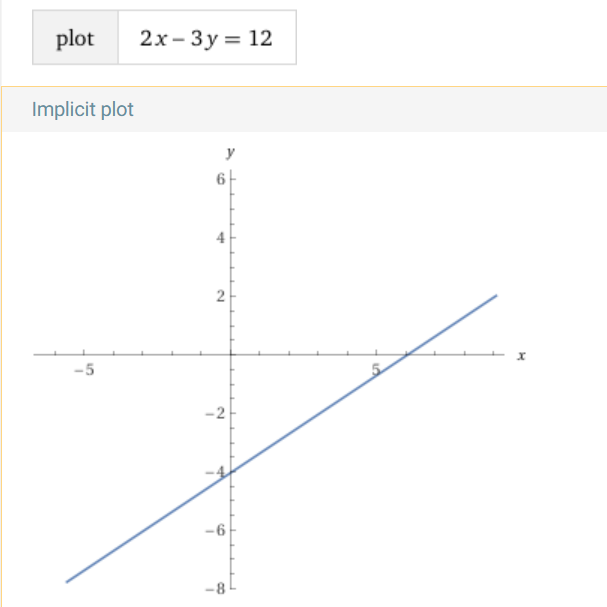

A linear equation in two variables follows the form:

ax + by = c

where a, b, and c are constants (with a and b not both zero), and x and y are variables. Plotted on a graph, the equation is a straight line. It remains linear as long as each variable appears only to the first power; if a variable is squared, cubed, or multiplied by another variable, the equation becomes nonlinear.

System of Linear Equations

A system of linear equations contains multiple equations solved together. It determines unknown values that satisfy all equations simultaneously.

Example:

2x + 3y = 7

x − 2y = −3

Systems can have one solution, infinitely many solutions, or no solution.
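As a worked illustration, the example system can be solved with the determinant-based method (Cramer's rule). This is a minimal sketch: the function name `solve_2x2` and its argument order are assumptions made for this example, and exact fractions are used so no rounding occurs.

```python
from fractions import Fraction

def solve_2x2(a1, b1, c1, a2, b2, c2):
    """Solve a1*x + b1*y = c1 and a2*x + b2*y = c2 by Cramer's rule.

    Expects integer coefficients. Returns (x, y) as exact fractions,
    or None when the determinant is zero (the system then has either
    no solution or infinitely many).
    """
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    x = Fraction(c1 * b2 - c2 * b1, det)
    y = Fraction(a1 * c2 - a2 * c1, det)
    return x, y

# 2x + 3y = 7 and x - 2y = -3
x, y = solve_2x2(2, 3, 7, 1, -2, -3)
print(x, y)  # 5/7 13/7
```

Substituting back confirms the result: 2(5/7) + 3(13/7) = 49/7 = 7 and 5/7 − 2(13/7) = −21/7 = −3.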

Graphical Representation of Linear Equations

Each linear equation in two variables represents a straight line on a coordinate plane. The solution set of a system consists of the points that lie on all of the lines at once.

- One solution: Lines intersect at a single point.

- No solution: Lines are parallel and never meet.

- Infinite solutions: Lines overlap completely.

In three dimensions, each linear equation represents a plane, and the intersection of the planes defines the solution set.
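For two lines in the plane, the three cases can be told apart without drawing anything. The sketch below is one possible approach, with a hypothetical function name `classify`. It assumes each equation really describes a line, meaning its x and y coefficients are not both zero.

```python
def classify(a1, b1, c1, a2, b2, c2):
    """Classify the system a1*x + b1*y = c1, a2*x + b2*y = c2.

    Assumes each equation has at least one nonzero coefficient
    among its x and y terms, so that it describes a line.
    """
    det = a1 * b2 - a2 * b1
    if det != 0:
        return "one solution"  # the lines cross at a single point
    # det == 0: the lines are parallel. They coincide only if the
    # constants are in the same proportion as the coefficients.
    if a1 * c2 - a2 * c1 == 0 and b1 * c2 - b2 * c1 == 0:
        return "infinitely many solutions"
    return "no solution"

print(classify(2, 3, 7, 1, -2, -3))  # one solution
print(classify(1, 1, 1, 1, 1, 2))    # no solution
print(classify(1, 1, 1, 2, 2, 2))    # infinitely many solutions
```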

Linear Combinations

A linear combination expresses a vector as a sum of scaled vectors. The idea underlies the span of a set of vectors and the solution of linear systems.

General form:

a₁v₁ + a₂v₂ + ⋯ + aₙvₙ

where a₁, a₂, …, aₙ are scalars, and v₁, v₂, …, vₙ are vectors. Linear combinations determine whether a set of vectors spans a space. If every vector in a space can be expressed as a linear combination of the given vectors, those vectors form a spanning set.
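The general form can be computed component by component. Here is a short sketch with a hypothetical helper `linear_combination`; it assumes a non-empty list of vectors that all have the same number of components.

```python
def linear_combination(scalars, vectors):
    """Return a1*v1 + a2*v2 + ... + an*vn for equal-length vectors."""
    dim = len(vectors[0])
    result = [0] * dim
    for a, v in zip(scalars, vectors):
        for i in range(dim):
            result[i] += a * v[i]
    return tuple(result)

e1, e2 = (1, 0), (0, 1)
print(linear_combination([3, -2], [e1, e2]))         # (3, -2)
print(linear_combination([2, 1], [(1, 1), (0, 3)]))  # (2, 5)
```

The first call illustrates spanning: any 2D vector (x, y) equals x·(1, 0) + y·(0, 1), so these two vectors span the plane.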

Linear Dependence and Independence

A set of vectors is either linearly dependent or linearly independent.

- Linearly dependent vectors: At least one vector can be expressed as a linear combination of the others.

- Linearly independent vectors: No vector can be expressed as a linear combination of the others.

Mathematically, a set of vectors {v₁, v₂, …, vₙ} is dependent if there exist scalars a₁, a₂, …, aₙ, not all zero, such that:

a₁v₁ + a₂v₂ + ⋯ + aₙvₙ = 0

If the only solution is all scalars being zero, the vectors are independent.
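One way to test this in practice is to compute the rank of the matrix whose rows are the vectors: the set is independent exactly when the rank equals the number of vectors. The sketch below uses Gaussian elimination with exact fractions. The names `rank` and `is_independent` are illustrative, and all vectors are assumed to have the same length.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of the matrix whose rows are the given vectors."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(ncols):
        # Look for a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [x - factor * p for x, p in zip(rows[i], rows[r])]
        r += 1
    return r

def is_independent(vectors):
    """True if no nontrivial combination of the vectors gives zero."""
    return rank(vectors) == len(vectors)

print(is_independent([(1, 0), (0, 1)]))                   # True
print(is_independent([(1, 2), (2, 4)]))                   # False
print(is_independent([(1, 0, 0), (0, 1, 0), (1, 1, 0)]))  # False
```

In the second call, (2, 4) = 2·(1, 2), so the scalars 2 and −1 give a nontrivial combination equal to zero. In the third, the last vector is the sum of the first two.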
